Data science platform for developing artificial intelligence systems in the automotive industry

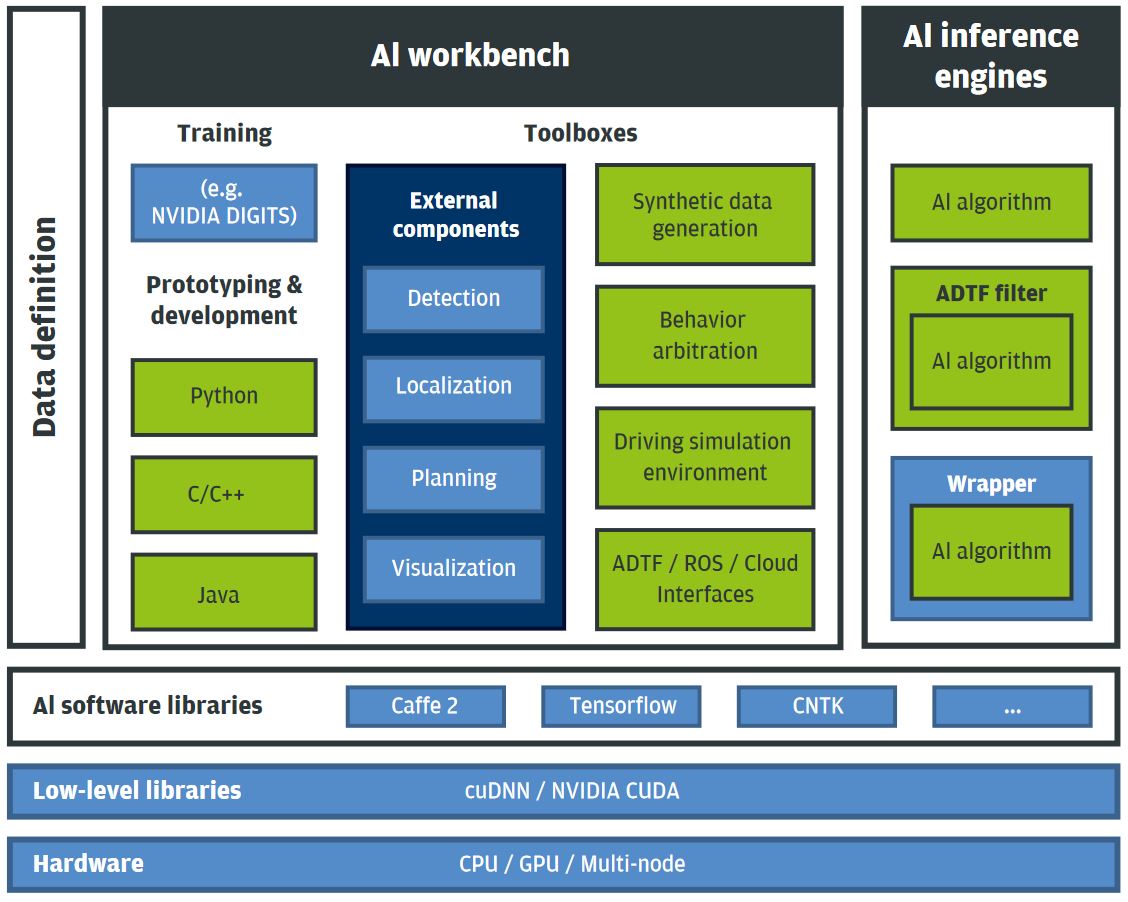

Elektrobit is committed to developing systems that enable our customers to deploy novel, cutting-edge technologies reliably and safely in the automotive landscape. Data science and artificial intelligence are now shaping the future of the automotive industry, where the dream of self-driving cars is steadily becoming a reality. Elektrobit’s data science platform, whose block diagram is illustrated in Figure 1, is designed for building artificial intelligence engines for various automotive areas, such as automated driving systems.

We want to help answer the following questions:

What is the role of artificial intelligence for cars in the automotive industry?

And why is a robust framework for developing such systems necessary?

The AI digital era

Artificial intelligence (AI) describes a machine that mimics human cognitive functions, such as learning and problem solving. AI was first defined once humans had developed the digital computing devices that made it possible and, like any other digital technology, it has ridden waves of hype and gloom. Today, artificial intelligence is poised to unleash the next wave of digital disruption: we are already seeing real-life benefits in domains such as computer vision, robotics, automotive development, and language processing. Machines powered by AI can now perform many tasks, such as recognizing complex patterns, synthesizing information, drawing conclusions, and forecasting.

Although the successes of AI are significant, it is worth remembering that AI also has limitations. For example, one major criticism of many AI systems is that they are often regarded as black boxes, which only map a relationship between input and output variables based on a training data set. This raises concerns about the system’s ability to generalize to situations that were not present in the training data set, and about the fact that it is often hard to gain true insight into the problem and the nature of the solution.

Interest in AI boomed again in the 21st century, due to advances in fields like deep learning, underpinned by faster computers and the availability of big data. These advances have been facilitated by the ever-increasing computing power of central processing units (CPUs), but even more by powerful graphics processing units (GPUs), which can process images 40 to 80 times faster than a conventional processor. This has enabled the training speed of artificial intelligence systems to improve five- or even sixfold in each of the last two years.
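As a quick worked check, and assuming the stated yearly improvements compound multiplicatively (an assumption, not something the figures above specify), the cumulative gain over the two years would be:

```latex
S_{\text{2 years}} = s^{2}, \qquad s \in [5, 6]
\;\Rightarrow\; 5^{2} = 25 \;\le\; S_{\text{2 years}} \;\le\; 6^{2} = 36
```

Under that assumption, training speed would have increased roughly 25- to 36-fold over the period.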

The architecture

The architecture of Elektrobit’s data science platform for artificial intelligence engines, depicted in Figure 1, is built around the two major operations of a deep learning design workflow, training and inference, along with additional layers for hardware integration and performance improvement. The Workbench is responsible for the training, prototyping, and development of the system; it leverages existing methods and tools and treats AI algorithms as toolboxes within the platform. The training itself feeds the previously defined data to the network and allows it to learn a new capability by reinforcing correct predictions and correcting wrong ones. The toolboxes available in Elektrobit’s data science platform for artificial intelligence engines include:

- Synthetic data generator: artificial generation of synthetic data using our Generative One-Shot Learning algorithm,

- Driving context understanding: classification of the driving context from grid-fusion information,

- Behavior arbitration: driving context understanding and strategy optimization from real-world grid representations,

- Driving simulation environment: training, evaluation, and testing of AI algorithms in a virtual simulator such as Microsoft’s AirSim, and

- ADTF/ROS/Cloud interfaces: interfacing with automotive and robotics frameworks (EB Assist ADTF or the Robot Operating System (ROS)), as well as the possibility to deploy training and evaluation jobs in cloud environments.
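The training step described above (feeding data to a model, reinforcing correct predictions and correcting wrong ones) can be illustrated with a minimal gradient-descent loop. This is a generic textbook sketch using logistic regression, not the Workbench’s actual training code; `train_logistic` and `predict` are hypothetical names chosen for illustration.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(
    data: Sequence[Tuple[List[float], int]], epochs: int = 200, lr: float = 0.5
) -> Tuple[List[float], float]:
    """Hypothetical minimal trainer: stochastic gradient descent on log-loss."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            # The error is near zero for confident correct predictions and large
            # for wrong ones, so mistakes drive most of the parameter update.
            err = p - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w: List[float], b: float, x: List[float]) -> int:
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5)
```

A deep network replaces the single linear layer with many layers, but the feed-predict-correct cycle is the same.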

The inference engines represent application deployment wrappers for trained models. For example, one such wrapper is the EB Assist ADTF (Automotive Data and Time-triggered Framework) component for driving context understanding.
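To make the idea of a deployment wrapper concrete, the sketch below hides a trained model behind a single `predict()` call that applies the training-time normalization and maps scores to labels. The class name, the normalization scheme, and the label names are hypothetical; the actual EB Assist ADTF component interface is not described here.

```python
from typing import Callable, List, Sequence


class InferenceEngine:
    """Hypothetical deployment wrapper: the host framework only sees predict()."""

    def __init__(
        self,
        model: Callable[[List[float]], List[float]],
        labels: Sequence[str],
        mean: float,
        std: float,
    ) -> None:
        if std <= 0:
            raise ValueError("std must be positive")
        self._model = model
        self._labels = list(labels)
        self._mean = mean
        self._std = std

    def _preprocess(self, raw: Sequence[float]) -> List[float]:
        # Reuse the normalization that was applied to the training data.
        return [(v - self._mean) / self._std for v in raw]

    def predict(self, raw: Sequence[float]) -> str:
        scores = self._model(self._preprocess(raw))
        best = max(range(len(scores)), key=scores.__getitem__)
        return self._labels[best]
```

Keeping preprocessing inside the wrapper means the host framework cannot accidentally feed the model un-normalized data.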

The AI open source software libraries we have used as a basis are Caffe2, TensorFlow, and the Microsoft Cognitive Toolkit (CNTK). These were chosen as the three best performers in a comparative performance evaluation.
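The criteria of the evaluation mentioned above are not given here. As one plausible ingredient, the sketch below times a workload per candidate library and keeps the fastest three by median latency. The helper names and candidate keys are hypothetical, and a real comparison would likely weigh more than speed.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure(fn: Callable[[], object], repeats: int = 5) -> List[float]:
    """Wall-clock durations (seconds) of `repeats` calls to fn."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations


def top_k(results: Dict[str, List[float]], k: int = 3) -> List[str]:
    """Rank candidates by median duration (lower is better); keep the k fastest."""
    ranked = sorted(results, key=lambda name: statistics.median(results[name]))
    return ranked[:k]
```

The median is used rather than the mean so that a single slow outlier run (for example, a warm-up call) does not dominate the ranking.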

Building artificial intelligence for cars

In the automotive industry, one of the most important aspects of building software modules is the possibility of later industrializing the code and integrating it into vehicles’ embedded platforms.

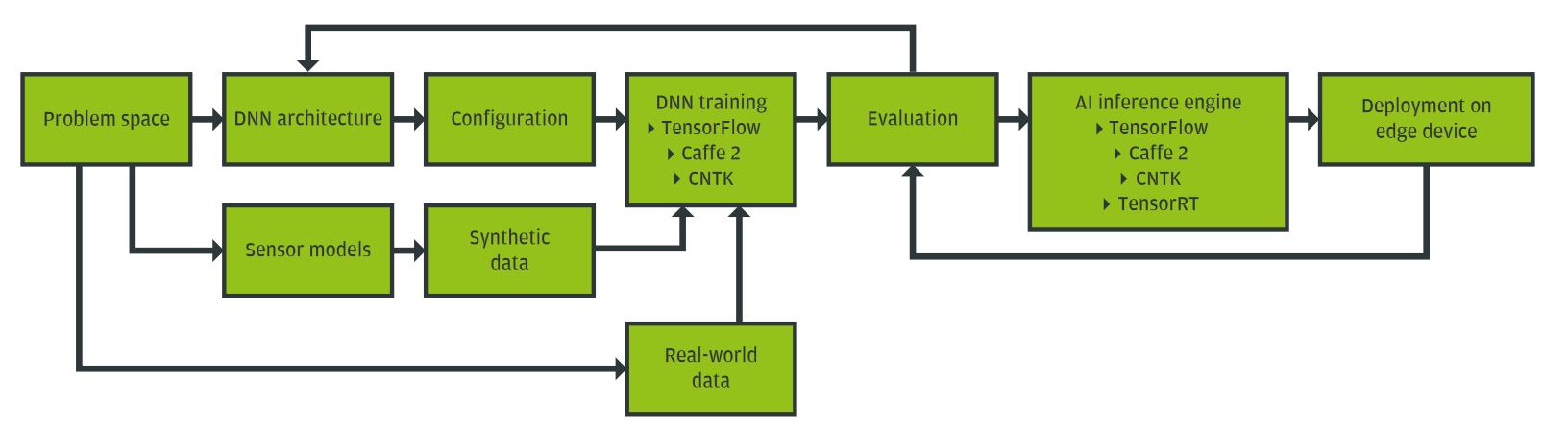

Developing AI algorithms in Elektrobit’s data science platform follows the workflow depicted in Figure 2. The first step consists of defining the requirements and collecting the necessary data for the training process. After the data is collected, it needs preprocessing, which includes annotation, normalization, and filtering. Data preprocessing is a key step that enables proper prototyping of the AI algorithms. A design phase is also necessary, followed by a training and validation process. Once the AI algorithm is tested and validated, it can be deployed as an AI inference engine.
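The filtering and normalization parts of the preprocessing step can be sketched for a single scalar signal as follows. Annotation (labeling) is a separate, largely manual step and is not shown. The plausibility range and the choice of z-score normalization are assumptions for illustration; the statistics are fitted on training data only, so validation and test data are transformed with the same parameters.

```python
import math
import statistics
from typing import List, Sequence, Tuple


def filter_samples(samples: Sequence[float], low: float, high: float) -> List[float]:
    """Drop NaNs and readings outside a (hypothetical) plausible range [low, high]."""
    return [s for s in samples if not math.isnan(s) and low <= s <= high]


def fit_normalizer(train: Sequence[float]) -> Tuple[float, float]:
    """Mean and standard deviation computed on the training split only."""
    mean = statistics.fmean(train)
    std = statistics.pstdev(train)
    # Guard against constant signals, which would otherwise divide by zero.
    return mean, (std if std > 0 else 1.0)


def normalize(samples: Sequence[float], mean: float, std: float) -> List[float]:
    """Z-score normalization with previously fitted parameters."""
    return [(s - mean) / std for s in samples]
```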

As a use case example, we are using the platform to build inference engines for ADTF. These engines are integrated within EB robinos, which includes software modules for automated driving. Each ADTF filter encapsulates an AI algorithm, such as driving context understanding, synthetic data generation, object detection and recognition, or semantic segmentation of the driving scene.

The wrappers are exported as dynamically linked libraries and referenced inside the implemented filters. One core idea behind Elektrobit’s data science platform is modularization, with a separate module for each AI component. This approach encapsulates deep learning features inside ADTF and also helps developers easily access and test existing methods, or implement their own solutions to their specific problems.
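The modular design described above, one self-contained module per AI component chained inside a host framework, can be mimicked in Python with a small registry. This is only an analogy: it does not use the ADTF API or dynamically linked libraries, and the filter names and their trivial logic are hypothetical placeholders for real AI components.

```python
from typing import Callable, Dict, List, Protocol


class AIFilter(Protocol):
    name: str

    def process(self, frame: Dict[str, object]) -> Dict[str, object]: ...


_REGISTRY: Dict[str, Callable[[], AIFilter]] = {}


def register(name: str):
    """Class decorator that makes a filter available under `name`."""
    def decorator(cls):
        _REGISTRY[name] = cls
        return cls
    return decorator


def build_pipeline(names: List[str]) -> List[AIFilter]:
    return [_REGISTRY[n]() for n in names]


def run_pipeline(filters: List[AIFilter], frame: Dict[str, object]) -> Dict[str, object]:
    for f in filters:
        frame = f.process(frame)
    return frame


@register("grid_stats")
class GridStatsFilter:
    """Hypothetical filter: counts occupied cells in a binary grid."""
    name = "grid_stats"

    def process(self, frame):
        out = dict(frame)  # copy so the input frame is never mutated
        out["occupied_cells"] = sum(sum(row) for row in frame["grid"])
        return out


@register("context")
class ContextFilter:
    """Hypothetical stand-in for an AI model: a trivial threshold rule."""
    name = "context"

    def process(self, frame):
        out = dict(frame)
        out["context"] = "dense" if out.get("occupied_cells", 0) > 3 else "sparse"
        return out
```

Because each filter only depends on the shared `process()` contract, a developer can swap in their own implementation by registering it under a new name.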

Optimizing the algorithms is another stage that was taken into consideration when the inference engine concept for artificial intelligence was designed. To improve computation speed, the best approach is to parallelize the workload on AI-dedicated graphics processing units using low-level acceleration libraries (e.g. cuDNN, NVIDIA’s library of primitives for deep neural networks).
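One common way to expose that parallelism is batching: frames are grouped so that each call into the accelerated backend processes many inputs at once, amortizing per-call overhead. The sketch shows only the batching logic; `run_batch` is a hypothetical stand-in for a GPU-backed framework call, and the batch size is an illustrative assumption.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def batched(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(items)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def infer_batched(
    frames: Iterable[T], run_batch: Callable[[List[T]], List[R]], batch_size: int = 32
) -> List[R]:
    """Run inference batch by batch, preserving the input order of results."""
    results: List[R] = []
    for batch in batched(frames, batch_size):
        results.extend(run_batch(batch))
    return results
```

A local `batched` helper is defined instead of `itertools.batched` because the latter is only available from Python 3.12 and yields tuples.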

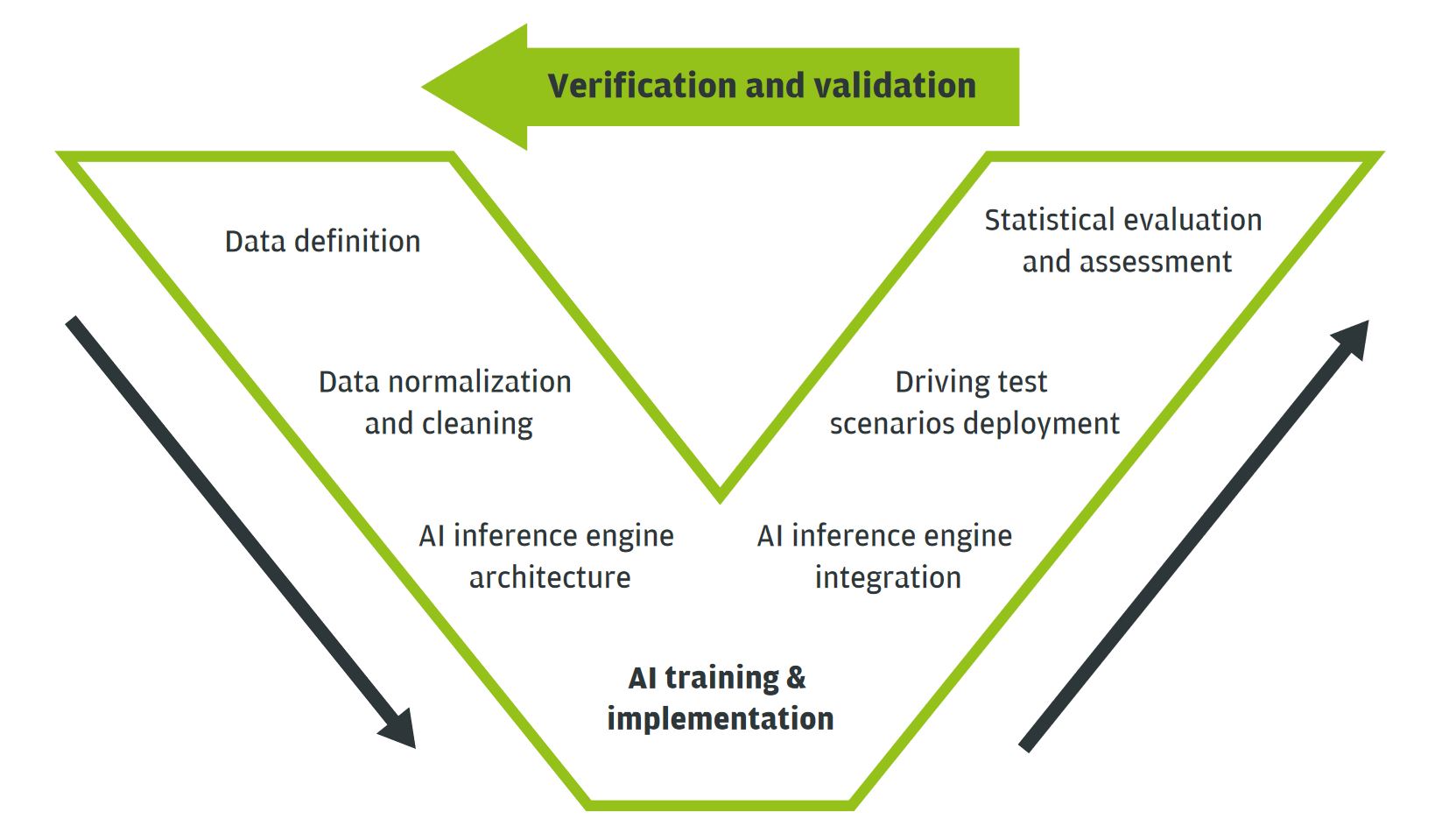

Automotive software engineering still demands a robust and predictable development cycle. The software development process in the automotive sector is subject to several standards, notably Automotive SPICE and ISO 26262. As far as software is concerned, these accepted standards rely conceptually on the traditional V-model development lifecycle.

It’s important to approach deep learning from a more controlled V-model perspective in order to address a long list of challenges, such as the requirements for the training, validation, and test datasets, the criteria for data definition and preprocessing, and the impact of parameter tuning.
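A V-model process benefits from dataset definitions that are fixed and reproducible. The sketch below performs a seeded, disjoint train/validation/test split; the 70/15/15 proportions and the seed are illustrative assumptions, not values taken from the platform.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    samples: Sequence[T], train: float = 0.7, val: float = 0.15, seed: int = 42
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle with a fixed seed, then cut into disjoint train/val/test parts."""
    if not (0 < train < 1 and 0 <= val < 1 and train + val < 1):
        raise ValueError("need 0 < train < 1, 0 <= val < 1, and train + val < 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # local RNG: no global side effects
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )
```

Fixing the seed means the same split can be regenerated later, which supports traceability when a test result has to be reproduced. For driving data, splitting by recording or scenario rather than by individual frame is usually advisable to avoid near-duplicate frames leaking across splits.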

In Figure 3, we propose a V-model for prototyping and development within the platform. The data definition (normalization and cleaning) and its exploitation through the AI inference engine architecture are crucial development phases, since a deep neural network’s (DNN’s) functional behavior is the combined result of its architectural structure and its automatic adaptation through training. Integrating the obtained inference engines, together with deploying the test scenarios and evaluating them statistically, provides the required assessment tools for such an architecture model.
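The text does not specify which statistics are used in the evaluation. As one example of what statistical evaluation of test scenarios can mean, the sketch below reports the pass rate over a set of scenarios together with a Wilson score confidence interval, which behaves better than the naive normal approximation for small samples or rates near 0 or 1. The choice of the Wilson interval is an assumption made for illustration.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95%)."""
    if trials <= 0 or not 0 <= successes <= trials:
        raise ValueError("need trials > 0 and 0 <= successes <= trials")
    p = successes / trials
    denom = 1.0 + z * z / trials
    centre = (p + z * z / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)
```

For example, 90 passed scenarios out of 100 give an interval of roughly 0.83 to 0.94, which makes explicit how much uncertainty remains after a finite test campaign.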

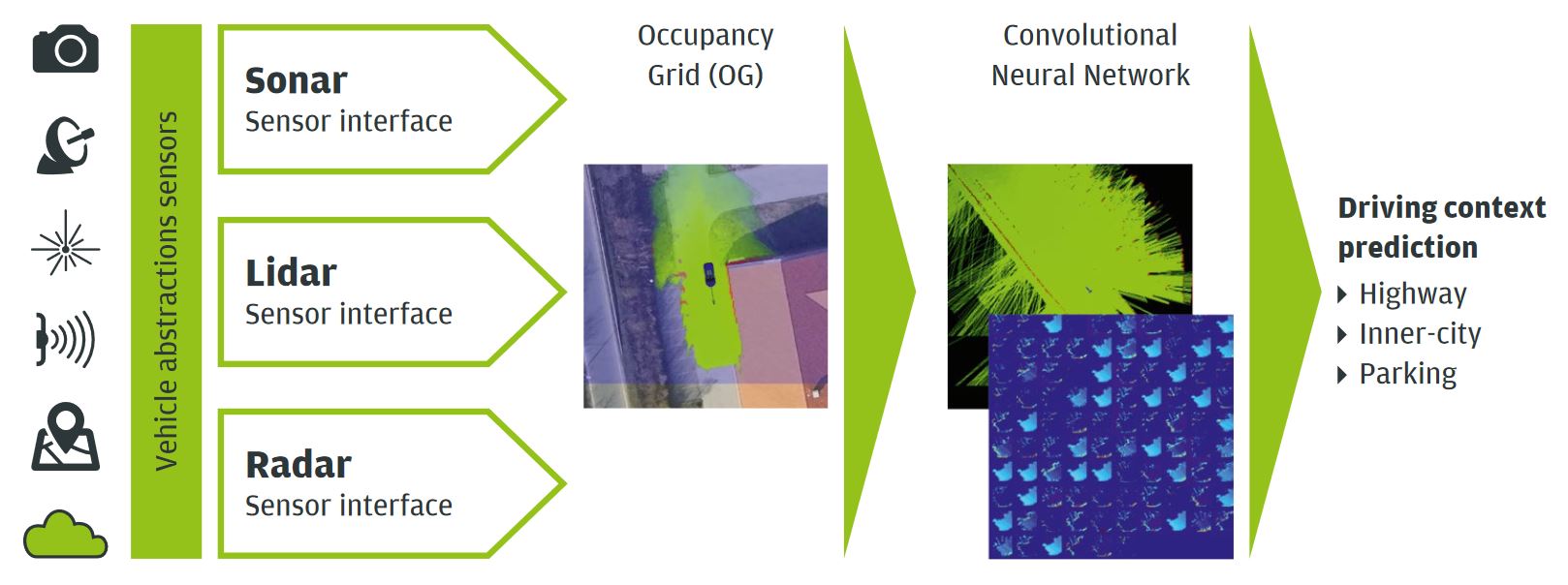

An inference engine for ADTF, developed using Elektrobit’s data science platform for artificial intelligence engines and the corresponding V-model, is Deep Grid Net (DGN). With its concept illustrated in Figure 4, DGN is a deep learning system designed for understanding the context in which an automated car is driving.

The Deep Grid Net (DGN) algorithm predicts this context by analyzing local Occupancy Grids (OGs) constructed from fused raw sensory data. The DGN approach proposed here leverages the power of deep neural architectures to learn a grid-based representation of the traffic scene. By using OGs instead of raw image data, we are able to cope with common uncertainties present in automated driving scenes, for example changes in sensor calibration, pose, time, and latency.
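How the occupancy grids fed to DGN are built is not described here. The sketch below shows a generic, simplified construction: fused 2D detections (assumed to be in metres, in the ego-vehicle frame) are accumulated as log-odds in a square grid centred on the vehicle and converted to occupancy probabilities. Grid size, cell size, and the per-hit log-odds increment are hypothetical parameters, and free-space updates along sensor rays are omitted.

```python
import math
from typing import Iterable, List, Tuple


def build_occupancy_grid(
    points: Iterable[Tuple[float, float]],
    size_m: float = 20.0,
    cell_m: float = 0.5,
    l_hit: float = 0.85,
) -> List[List[float]]:
    """Local grid centred on the ego vehicle; returns P(occupied) per cell."""
    n = int(round(size_m / cell_m))
    log_odds = [[0.0] * n for _ in range(n)]  # 0.0 log-odds = 0.5, i.e. unknown
    half = size_m / 2.0
    for x, y in points:
        col = math.floor((x + half) / cell_m)
        row = math.floor((y + half) / cell_m)
        if 0 <= row < n and 0 <= col < n:  # ignore detections outside the grid
            log_odds[row][col] += l_hit
    # Convert log-odds l back to a probability: p = e^l / (1 + e^l).
    return [[1.0 - 1.0 / (1.0 + math.exp(l)) for l in row] for row in log_odds]
```

Because each cell summarizes evidence from all fused sensors, small calibration or timing offsets shift probability mass between neighbouring cells rather than changing the input format, which is one intuition for why grid inputs are more robust than raw images.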

This learned representation can then be used in different automated driving tasks, such as driving context understanding. The DGN algorithm can be deployed within EB robinos software modules which are used to create automated driving systems with artificial intelligence for cars.